One thing I've noticed about you, dear readers, is that you enjoy a good hack and ways to save time in your work. The PNP posts that receive the most views are those that suggest ways to make your job easier, lighter, or more efficient. This comes as no surprise given that many of us work more than 40 hours per week on research, teaching, and service. On top of that, many of us are overachievers with high expectations for ourselves, so we want to do more, but our time is finite. The neoliberal academy always demands more work from us, and our own standards of excellence can sometimes lead to exhaustion, overwork, and burnout. Read more about those realities here.

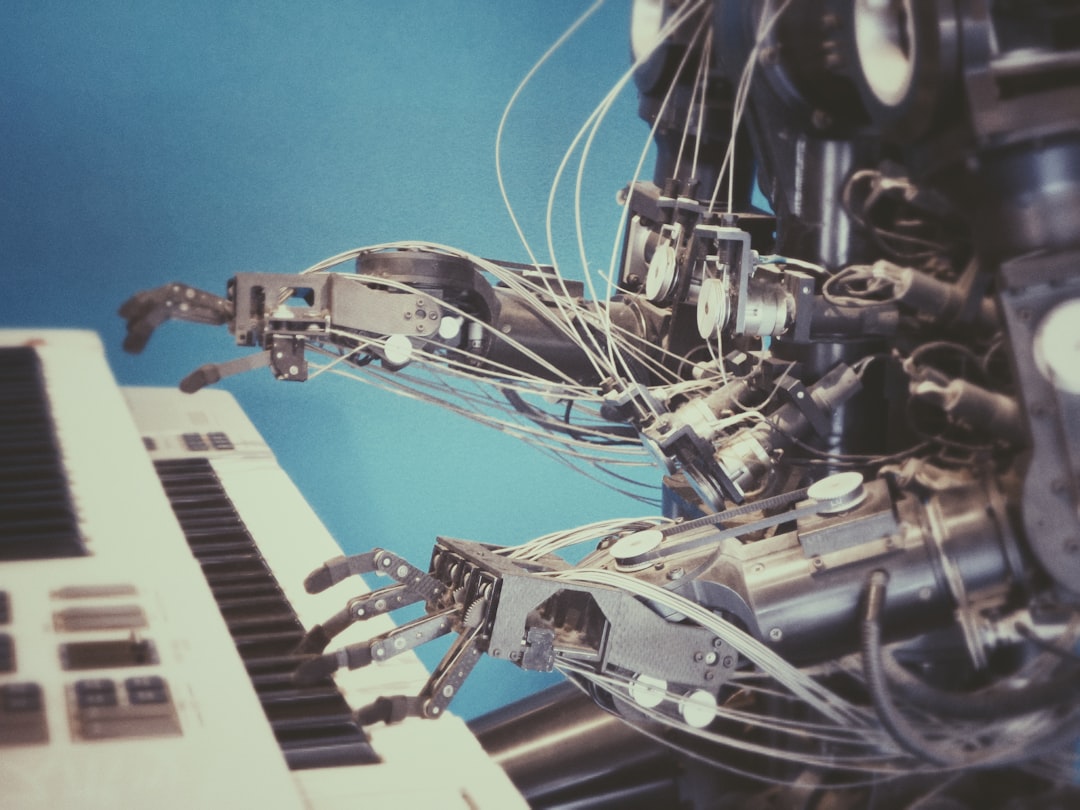

The prospect of using artificial intelligence (AI) tools for academic writing and other tasks is alluring because of their ability to offer us support, shortcuts, and time-saving strategies. I've previously stated that, while I share many of the concerns expressed across the academy and the world at large about the rapid influx of AI into our lives and the absence of ethical safeguards, I am also excited about the potential it conjures. Specifically, AI tools can save precious time and even make my work better.

In this post, I'll describe how I'm currently using AI as a personal assistant for various tasks that I used to do on my own.

If you're interested in my overall thoughts on the ethics and practice of using AI in academic writing, I recommend starting with my earlier post on that topic.

The Editor

I use a tool called QuillBot to edit all my PNP posts, emails, and academic papers. QuillBot is an AI-powered tool that can help you rephrase and reword your text while maintaining the original meaning. I think of QuillBot as my personal, on-demand copyeditor. I use it to improve the flow and clarity of my sentences while still retaining my personal voice. It can also switch between “formal” and “simple” language depending on your intended audience.

I’ll give you a few examples of how QuillBot can revise text for different effects. Here’s a sentence that could use some help in terms of spelling, grammar, and structure:

Some folks are aprehensive about using QuillBot or other AI tools in academic work, because they feel that using artificiail intellegence is somehow cheating at their jobs.

Here are QuillBot’s suggestions for revision in “formal” mode, which is what I usually use for academic writing: Some individuals are hesitant to use QuillBot or other AI tools in academic work because they believe that doing so constitutes unethical behavior.

Here are the suggestions in “fluency” mode, which is the mode I use for PNP newsletter posts: Some people are concerned about using QuillBot or other AI tools in academic work because they believe it is cheating at their jobs.

Finally, here’s “shorten” mode, which is what I use when I need to cut words from a document that is too long: Some academics worry that using QuillBot or other AI tools is cheating.

In each of these cases, I can choose whether to accept some or all of the suggested revisions, and I usually combine the text that QuillBot revised for clarity with words or turns of phrase that I prefer to use. As with a human copyeditor, I get to decide which revisions I ultimately include. QuillBot is not my ghostwriter but rather my editor.

Shorten mode has really sped up my process for cutting words from a document. I usually avoid shortening too many sentences in a row using this tool because it makes the text feel too staccato, but carefully selecting a few here and there to simplify and shorten actually increases readability while cutting for word count.

I believe Grammarly has many of the same capabilities as QuillBot, but I haven't used the former, so I can't really compare them.

And, as I explained in my first post on AI, I don't believe using AI is “cheating” when it is used as a research assistant or copyeditor.

The Secretary

I experimented with using ChatGPT to convert my notes on undergraduate paper feedback into more coherent, complete, and grammatically correct sentences. I began with a prompt that looked something like this: “Act as a professor. Rewrite these notes into a 200-word summary of feedback on a student’s paper. The tone should be warm, informal, and encouraging. The feedback should include what they did well and what they could improve.” I then pasted my notes for the student, and ChatGPT generated a few short paragraphs of feedback that were clear, well-organized, and heavily based on the notes I gave it.

As with QuillBot, I still have to edit the paragraphs generated by ChatGPT for accuracy and fluency, but this generally speeds up my process so that I can make my notes more coherent for the student more quickly. I'm not using ChatGPT to analyze the paper's merits; rather, I'm using it to help me communicate my own analysis.

You must also be clear about what you expect from a prompt. If I don't specify a warm and informal tone, ChatGPT will default to a formal and even clinical tone because that’s what it thinks professors sound like.

I see ChatGPT as my secretary, to whom I dictate my notes and who writes them up in a more structured format for the reader. I do the hard analytical work, and the secretary helps communicate that work, thereby saving me time. I can see the same method being used for anything where you convert notes into legible text, such as email.

The Librarian

ChatDOC is another tool that I find useful in my work. This tool extracts, locates, and summarizes important information from PDF documents using ChatGPT technology, making it a convenient way to summarize articles. This comes in handy in a variety of situations.

As a reader, I often need a summary of a dense academic text before diving in. I need to understand the point or major argument before I can wade through the nuances and complexities. When I was a graduate student, I would search the internet for summaries of such texts, but those summaries are not always available.

ChatDOC allows me to upload a PDF and then converse with it, asking it questions or giving it prompts. I might write, “Summarize this article in 200 words with a focus on its contribution to media studies.” Unlike ChatGPT, which will sometimes make up content if you ask it to summarize an article, ChatDOC will converse with you using the article itself because you have uploaded it.

Like other AI tools, ChatDOC is incapable of analyzing an article's argument and suggesting improvements or identifying flaws. In other words, while it can help you understand an article, it cannot do the hard work of chewing on its ideas and determining their utility or merit for your work. Analyzing is a higher-level skill than summarizing.

In the past, I've used research assistants to summarize articles so that I could decide whether or not to read them myself. I see ChatDOC in a similar light. It's like a librarian who can point me in the right direction, but I still have to borrow and read the book. Even so, I read much faster because I already know the main points and contributions going in.

Learning Curves

As you can probably tell, I've been nerding out on AI tools recently and have experimented with a few out of sheer curiosity. The ones listed above are the ones I've decided to keep in my arsenal. As with any new technology, there is a learning curve to using these tools, and if you are not as nerdy about tech as I am, they may feel laborious to learn. Also, as with anything at Publish Not Perish, experiment, keep what works for you, and discard the rest!

If any of these tools make you nervous about students using AI in your classes to cheat themselves out of critical thinking, I'll go ahead and confirm your fears: we as instructors will have to radically reimagine our assignments in light of these rapidly evolving developments. This Chronicle article describes how students are currently utilizing ChatGPT, for example. I'd also argue that learning to use these tools ethically in our own work will help us teach students to use AI more effectively.

I'm curious if any of you are experimenting with other AI tools in your work! Feel free to share any concerns as well. We love dialogue here at PNP.